Artificial intelligence has become an everyday assistant for your students. They turn to AI tools for information, advice, and fun.

But alongside its benefits, this shift also makes students more likely to encounter (and create!) misinformation online. Fake content can undermine not only academic integrity but also student well-being and the school’s cybersecurity.

Let’s explore the AI-related challenges that young users face today and how to protect them online.

How AI Shapes Students’ Everyday Lives

It’s not easy being a teenager in the AI era.

Unlimited access to artificial intelligence lets anyone create content in seconds. That looks like an easy win, especially when researching information and doing homework.

But while 80% of UK students use AI for their schoolwork, only 47% can identify AI-generated misinformation, according to an Oxford University report.

Artificial intelligence benefits students and teachers in educational settings, but it also poses risks. The main one: presenting false content as true. Even when GenAI outputs sound expert and trustworthy, they can still mislead users of all ages.

Young people are particularly likely to believe what isn’t true. According to the Future Report, the younger the users, the less inclined they are to question whether information is trustworthy. Used to a fast-paced life, they decide quickly whether content is reliable.

“There should be an obligation to tag content that was created by AI, some kind of watermark. There is more and more AI-generated content, and it is more difficult to recognize at first glance,” said an 18-year-old Polish student.

Trusting and sharing fake content can have severe consequences.

AI-Related Challenges for Students & Solutions

Online misinformation has been increasing for several years, but the AI revolution has given it an extra boost. Your students are growing up in an always-connected world, so using AI tools and consuming AI-generated content was a natural step for them.

As school admins and leaders, you need to stay up to date with the new technologies your students are using. Fake AI-based content may seriously affect their well-being, safety, cybersecurity, and learning. Here are just a few of the challenges teenagers face today:

1. AI Assistants, New False Friends

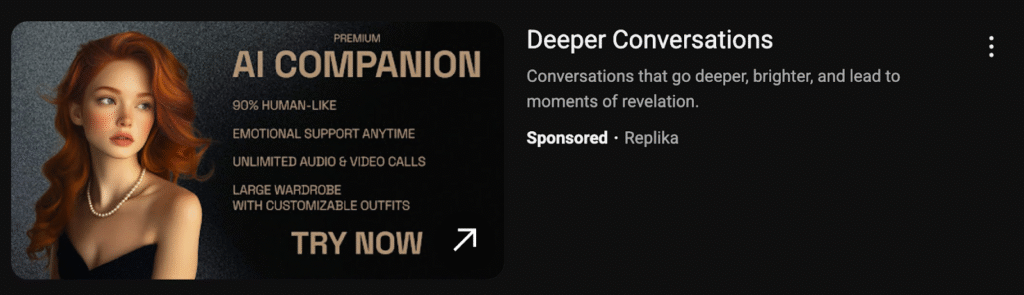

Students who use AI tools for schoolwork are more likely to talk to chatbots about their personal issues. Almost half of US students have done so, discussing their mental health or emotions with virtual assistants. Even more alarming: 20% of high school students know someone who has a “romantic relationship” with an AI chatbot.

This points not only to students’ poor mental health but also to a tendency to trust algorithms more than family and friends. Experts stress that no chatbot can replace a healthy human conversation: a chatbot tells users what they want to hear and can’t grasp the nuances of social or ethical topics.

Furthermore, by sharing personal information with AI companions, users often grant companies the right to use that data, which can increase cybersecurity risks.

An example of an AI companion advertisement

GAT Shield Solution for School Admins:

Filtering Disturbing Keywords

Prevent your students from discussing mental health issues with AI chatbots. Set up alerts that detect distressing keywords on any page students visit while logged in to their Google account. Include vocabulary related to depression, self-harm, suicide, bullying, disordered eating, etc.

You are notified whenever a student types a flagged word. In such cases, you can set the AI chatbot page to close automatically or redirect the user to another website (e.g., a mental health support site).

2. Deepfakes, Real Pain

Artificial intelligence undoubtedly stimulates creativity, but sometimes it goes in the wrong direction. Deepfake images and videos may be created just for fun, yet they can cause real harm: misinformation and cyberbullying. Your students can become both perpetrators and victims.

Did you know that almost 90% of explicit AI-generated content has been created by young users? While many legal systems and school policies are still catching up with this new, non-consensual use of people’s images, there is no doubt: it’s a form of sexual abuse, and unfortunately an increasingly common one among schoolchildren.

GAT Shield Solution for School Admins:

Real-Time Alerts for AI Deepfake Sites

Get notified when a student visits a website or application related to deepfake creation, so you can react quickly. To monitor each student’s online activity in the Chrome browser, on Chromebooks, and in Gmail, configure an alert for relevant keywords. It will detect and block these keywords in text, including text that appears in images.

In GAT Shield, you can also block specific websites and web applications so students can’t access them at all. If a student attempts to break a rule on a school device, you can automatically display educational resources about the impact of AI deepfakes.

3. AI Scams, True Loss

Artificial intelligence has no moral limits, so it also helps cybercriminals create much more convincing scams. In just a few minutes, AI can generate an entire website modeled on a real one, complete with trustworthy-looking pictures, financial reports, a blog, and contact information.

And phishing is becoming even more sophisticated. Scammers have begun using fake video and audio content based on real people’s identities. AI can generate fake video and voice messages that appear to come from friends, teachers, or family members, as long as recordings of them are publicly available. Although this is still new ground for cyberattacks, an AI voice-cloning scam has already cost $15,000 to a mother who believed her daughter had been kidnapped.

GAT+ Solution for School Admins:

Comprehensive Phishing Protection

First, look for signs of traditional phishing to prevent data loss. They include unexpected emails, unusual inbox activity, frequent password resets, urgent language, and suspicious links and attachments.

Audit all Gmail activity in your school domain, searching for specific keywords, file types, and unusual behavior with the comprehensive features of GAT+.

Enforce two-factor authentication (2FA) or, even better, multi-factor authentication (MFA) for all users to reduce the risk of account takeover and identity theft. Get alerted when somebody at your school disables 2FA; that can be a red flag for a phishing attack.

4. Wrong AI Data, Failed Homework

Students using ChatGPT for their assignments was one of the first challenges AI brought to schools. In 2025, at least 35% of European students use AI tools for homework. Many schools now allow limited use of AI and are aware that students may copy and paste AI content. However, some students don’t realize that chatbots can also mislead them.

AI assistants can misrepresent information, rely on outdated data, and confuse facts with opinions. According to a DW study, leading chatbots distorted news content in 45% of their answers. As a result, using AI as a homework shortcut can seriously undermine learning.

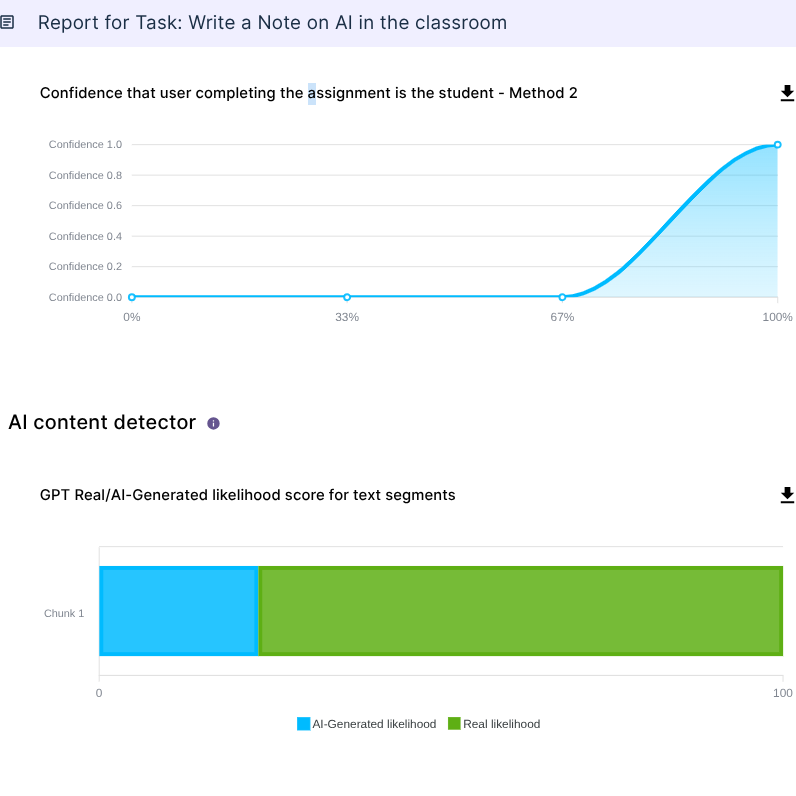

Taskmaster Solution for School Admins:

Early AI-Content Detection in Student Assignments

Use the Taskmaster tool to verify whether assignments students submit in Google Classroom were written by them alone. Based on an analysis of each student’s unique writing patterns, the report shows which parts of the text were pasted directly and which were written by the student. You can also view an AI-generated likelihood score for the entire text.

If you detect any signs of AI use, make sure students are regularly reminded that AI chatbots can be wrong and that their output should always be double-checked against other reliable sources.

Be Aware and Prevent Misleading AI in Google Workspace

For everyone at your school, awareness of AI misuse is key. Algorithms don’t know moral limits unless humans establish them. Proactive prevention helps protect your students from AI-generated misinformation, harmful behavior, and cybersecurity risks.

- Foster critical thinking about online information and interactions

- Teach students how to recognize AI-generated content

- Show how AI tools can be used productively to drive innovation

- Look for early signs of mental health struggles and provide support

- Implement strong cybersecurity measures (web filtering, real-time alerts, online activity monitoring, copy-paste blocking) to limit AI misuse

Artificial intelligence is a powerful tool that can either harm or boost every student’s potential. Create a safe and secure Google Workspace for Education environment so that AI, combined with students’ creativity and digital skills, can lead them to outstanding results.

Join our newsletter for practical tips on managing, securing, and getting the most out of Google Workspace, designed with Admins and IT teams in mind.