Modern-day problems (require modern-day solutions)

As AI is increasingly used to help with mundane tasks we would rather not do, there has also been a rise in nefarious uses of the new technology.

Students who have grown up with AI will often be the first to find new uses for it, so it’s important to try to stay one step ahead.

It has been well documented that some bad actors use this technology to generate fake images of their peers, ranging from scantily clad pictures to explicit nude photos, otherwise known as ‘deepfake nudes’.

GAT Shield can already stop students from searching for these tools and alert Google Workspace for Education administrators about students using AI resources for malevolent use cases.

Set up Alerts for AI Deepfake Nude Sites

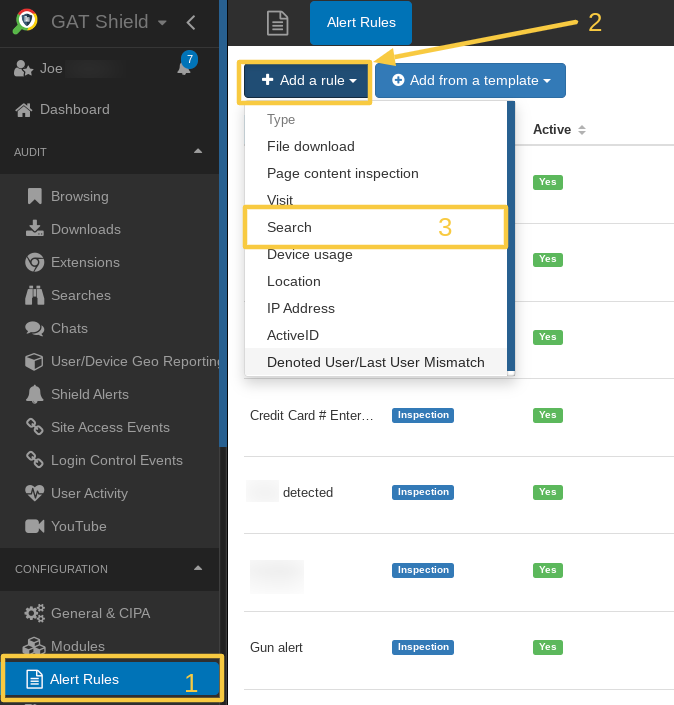

To set this up, go to GAT Shield > Alert Rules.

Click ‘Add a rule’ to create a new rule.

Choose ‘Search’ from the dropdown menu.

Start Configuring the Alert Rule

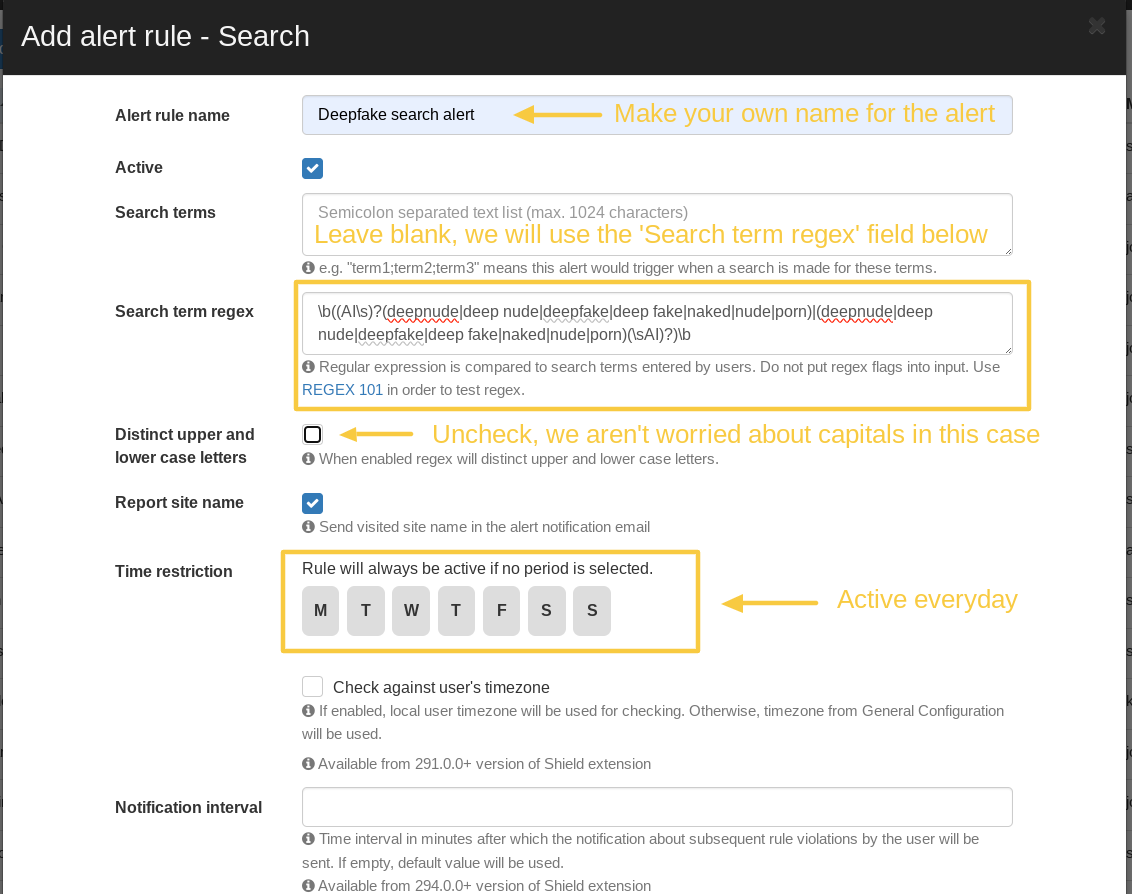

You will be presented with a modal for configuring the rule.

Give your alert rule a name, and enter the regex pattern below.

\b((AI\s)?(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)|(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)(\sAI)?)\b|\b((AI\s(deep|fake))|((deep|fake)\sAI))\b

Understanding the Regex Elements

Let’s break down the regex together. (If you don’t care about how it works, you can skip to ‘Finish Configuring the Alert Rule’.)

- `\b` is a word boundary. This means the pattern will only match if the keyword is a whole word, not part of another word.
- `(AI\s)?` matches the word “AI” followed by a space, but this is optional due to the `?`.
- `(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)` matches any one of these keywords.
- The `|` symbol represents OR, so the regex will match if either the pattern before or after the `|` is found.
- `(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)(\sAI)?` matches any of the keywords followed by “AI”, but “AI” is optional.
- This is followed by `|` again to represent OR.
- `\b((AI\s(deep|fake))|((deep|fake)\sAI))\b` looks for the words ‘deep’ and ‘fake’, but only when they are used in conjunction with ‘AI’, as deep and fake are not bad words on their own, and it is okay to search for these words under normal circumstances. The question mark is missing this time, as we require ‘AI’ to be used with these words to trigger the alert.
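The breakdown above can be checked by rebuilding the pattern from its parts. The following is a minimal sketch in Python, used here only as a stand-in for testing. The variable names are made up for illustration, and it is an assumption that GAT Shield's own regex engine behaves the same way as Python's `re` module (for example, with regard to case sensitivity).

```python
import re

# The seven keywords that trigger the alert on their own.
KEYWORDS = r"(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)"

# Branch 1: a keyword with an optional "AI " in front of it.
AI_BEFORE = r"(AI\s)?" + KEYWORDS
# Branch 2: a keyword with an optional " AI" after it.
AI_AFTER = KEYWORDS + r"(\sAI)?"
# Branch 3: "deep" or "fake", but only next to "AI" (no "?" here).
AI_REQUIRED = r"((AI\s(deep|fake))|((deep|fake)\sAI))"

# Join the branches with "|" (OR) and wrap them in \b word boundaries.
rebuilt = r"\b(" + AI_BEFORE + "|" + AI_AFTER + r")\b|\b" + AI_REQUIRED + r"\b"

# The pattern from this article, split across lines for readability.
original = (
    r"\b((AI\s)?(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)"
    r"|(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)(\sAI)?)\b"
    r"|\b((AI\s(deep|fake))|((deep|fake)\sAI))\b"
)
assert rebuilt == original  # the parts reassemble into the same pattern

print(re.search(rebuilt, "nude") is not None)   # a keyword on its own matches
print(re.search(rebuilt, "AI nude").group(0))   # the optional "AI " is part of the match
print(re.search(rebuilt, "denude") is None)     # \b: not a whole word, so no match
```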

How the Regex Works

This regex will match any of the terms ‘deepnude’, ‘deep nude’, ‘deepfake’, ‘deep fake’, ‘naked’, ‘nude’ and ‘porn’.

The alert will trigger if the search query has ‘AI’ either before or after any of these words. However, ‘AI’ is optional: the alert will trigger whether or not ‘AI’ is in the query (we don’t want students searching for these words anyway).

On top of that, the regex will match if the terms ‘deep’ or ‘fake’ are searched, but only when they are directly preceded or followed by the term ‘AI’. We don’t care if ‘deep’ or ‘fake’ are searched on their own, as these are regular words that don’t usually have negative connotations.
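Before saving the rule, it can help to try the pattern against a few sample queries. The sketch below does this in Python. The helper `triggers_alert` and the sample queries are hypothetical and exist only for this illustration, and it is assumed (not confirmed here) that GAT Shield evaluates the pattern the same way Python's `re.search` does.

```python
import re

# The pattern from this article, split across lines for readability.
PATTERN = re.compile(
    r"\b((AI\s)?(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)"
    r"|(deepnude|deep nude|deepfake|deep fake|naked|nude|porn)(\sAI)?)\b"
    r"|\b((AI\s(deep|fake))|((deep|fake)\sAI))\b"
)


def triggers_alert(query: str) -> bool:
    """Return True if the pattern matches anywhere in the search query."""
    return PATTERN.search(query) is not None


# Hypothetical queries chosen to exercise each part of the pattern.
samples = [
    "deepfake maker",      # keyword on its own
    "AI nude generator",   # keyword with 'AI' in front
    "AI fake photo",       # 'fake' directly after 'AI'
    "deep AI tools",       # 'deep' directly before 'AI'
    "deep sea fishing",    # 'deep' without 'AI'
    "fake news examples",  # 'fake' without 'AI'
]

for query in samples:
    print(f"{query!r}: {triggers_alert(query)}")
```

Under these assumptions, the first four queries match and the last two do not, which is the behaviour described above.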

Protip: You can use your favourite AI tool to help you configure your own regex.

Finish Configuring the Alert Rule

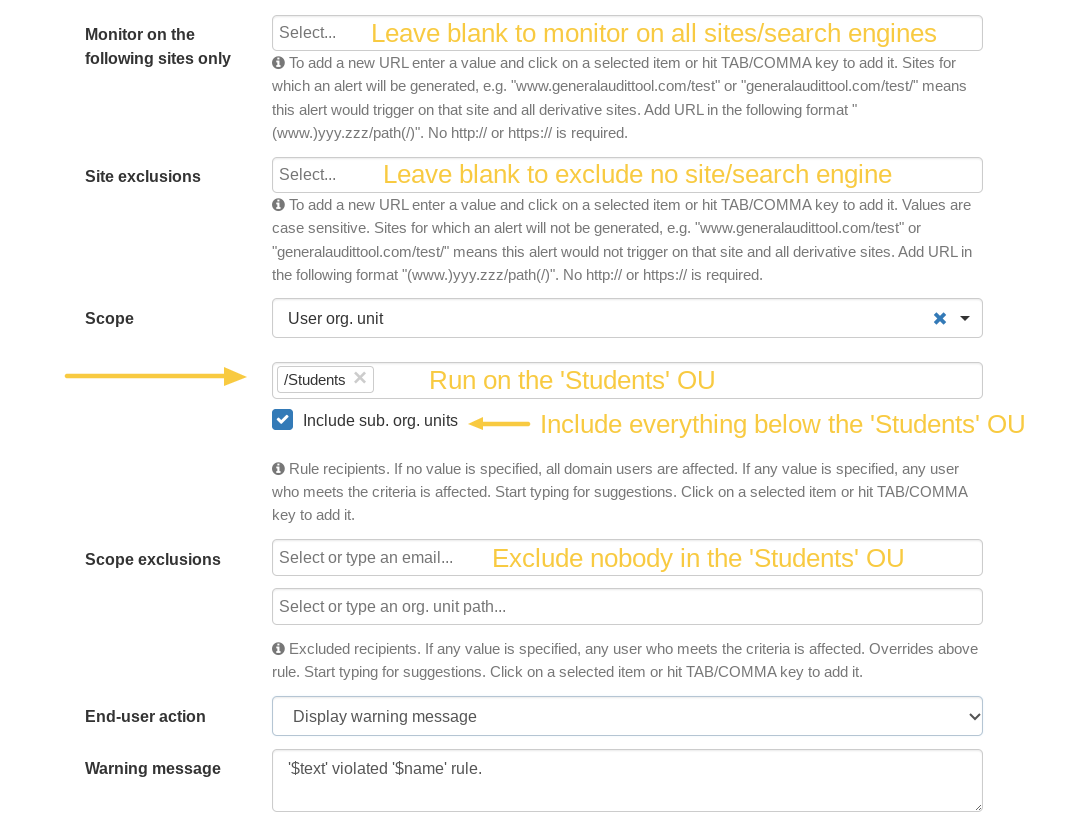

You can decide which accounts the alert runs on and how the accounts are affected when the alert triggers. Most of these options will differ for each organisation, but a popular example is shown below.

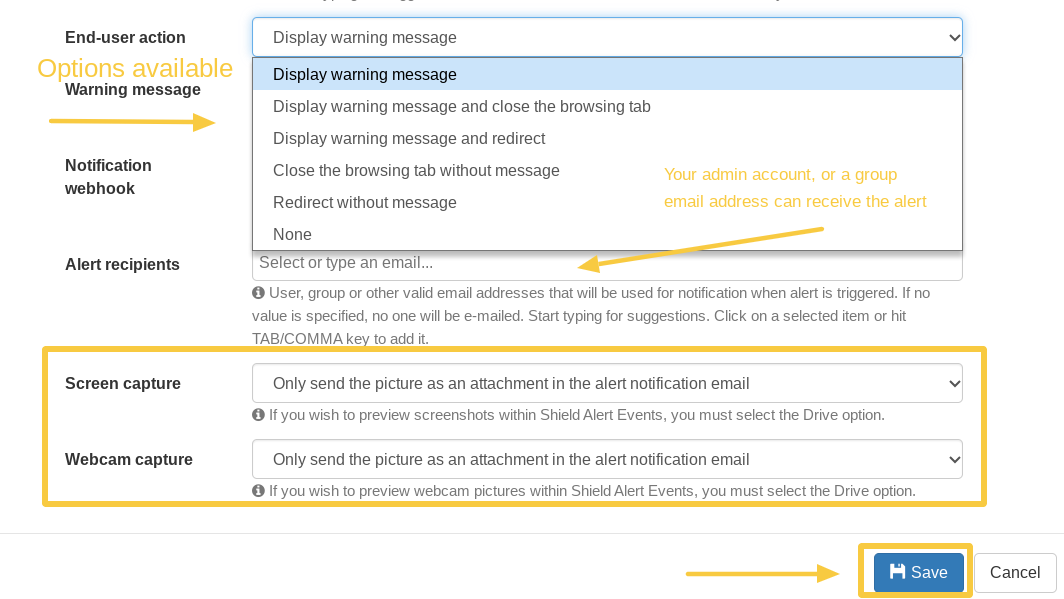

Several options are available when configuring the ‘End-user action’. If you would prefer that the account did not indicate that it tripped your alert, you can select ‘None’ in this section.

If desired, you can also capture a screenshot of the search page and a webcam image at the time the alert triggers, to confirm that the alert rule violator and the account holder are the same person.

When you are finished, click ‘Save’.

You have now helped put a stop to the illegitimate use of cutting-edge technology, such as AI deepfake nudes. At the same time, you can keep people at your organisation safe from harm and bullying.